Introduction: A World Rewired by Innovation

At the dawn of the 21st century, humanity stood at the threshold of a transformation unlike any before. The 20th century had brought electrification, radio, automobiles, and the first computers, but the new millennium ignited a new era altogether. The decades that followed became a technological renaissance in which digital innovation didn’t just change how we live; it redefined what it means to be human. From the rise of artificial intelligence to the evolution of biotechnology, the world has entered a state of perpetual acceleration.

The rhythm of life, once guided by natural cycles, is now driven by the pulse of processors, the hum of servers, and the invisible flow of data across continents. This post explores how technology in the 21st century has reshaped our world, not only through invention but through the reinvention of every aspect of human experience: communication, health, the economy, creativity, and even consciousness itself.

The Digital Dawn: When the Internet Became the New World

The early 2000s were a frontier. The internet had existed for decades, but it wasn’t until the new millennium that it became a global necessity. Dial-up connections gave way to broadband, and suddenly, information was not a luxury — it was an expectation.

The internet became the modern printing press. Knowledge that had once been limited to libraries and universities was suddenly available to anyone with a screen and a connection. Businesses began to realize that a website could be as valuable as a storefront, and people learned that identity could extend beyond the physical world.

Social media emerged as the defining cultural force. What started as personal profiles and simple friend lists grew into massive digital ecosystems where billions of people now interact, share, and express themselves daily. Facebook, Twitter, Instagram, and later TikTok transformed from communication tools into cultural engines. Humanity, once separated by geography, began to live in a shared digital consciousness.

But with this newfound unity came new challenges — misinformation, data exploitation, and the slow erosion of privacy. The same technology that connected us also began to control us, subtly shaping our thoughts through algorithms designed to predict and influence behavior. The internet became not just a reflection of humanity, but a force shaping its evolution.

The Smartphone Revolution: A Computer in Every Hand

If the internet was the digital dawn, the smartphone was its morning sun. The first iPhone, released in 2007, didn’t just change technology — it changed behavior. Suddenly, the world was in our pockets. Communication, entertainment, work, and even love were compressed into glowing rectangles of glass and silicon.

The smartphone blurred the boundary between online and offline. We stopped “logging on” — we were always connected. Apps replaced objects; wallets became digital, maps became interactive, and cameras became portals to memory. The convenience was intoxicating, but the implications were profound.

Attention became the new currency. Companies realized that the most valuable resource was not oil, gold, or even data — it was time. Every notification, every vibration, every scroll was designed to capture one more second of our attention. The human mind became the marketplace, and the smartphone the medium of exchange.

Yet, despite the criticisms of addiction and distraction, the smartphone remains one of humanity’s most powerful tools. It empowers citizens to record injustice, enables remote learning, and connects families across oceans. It is both a symbol of modern dependence and modern empowerment — a paradox that defines the technological age.

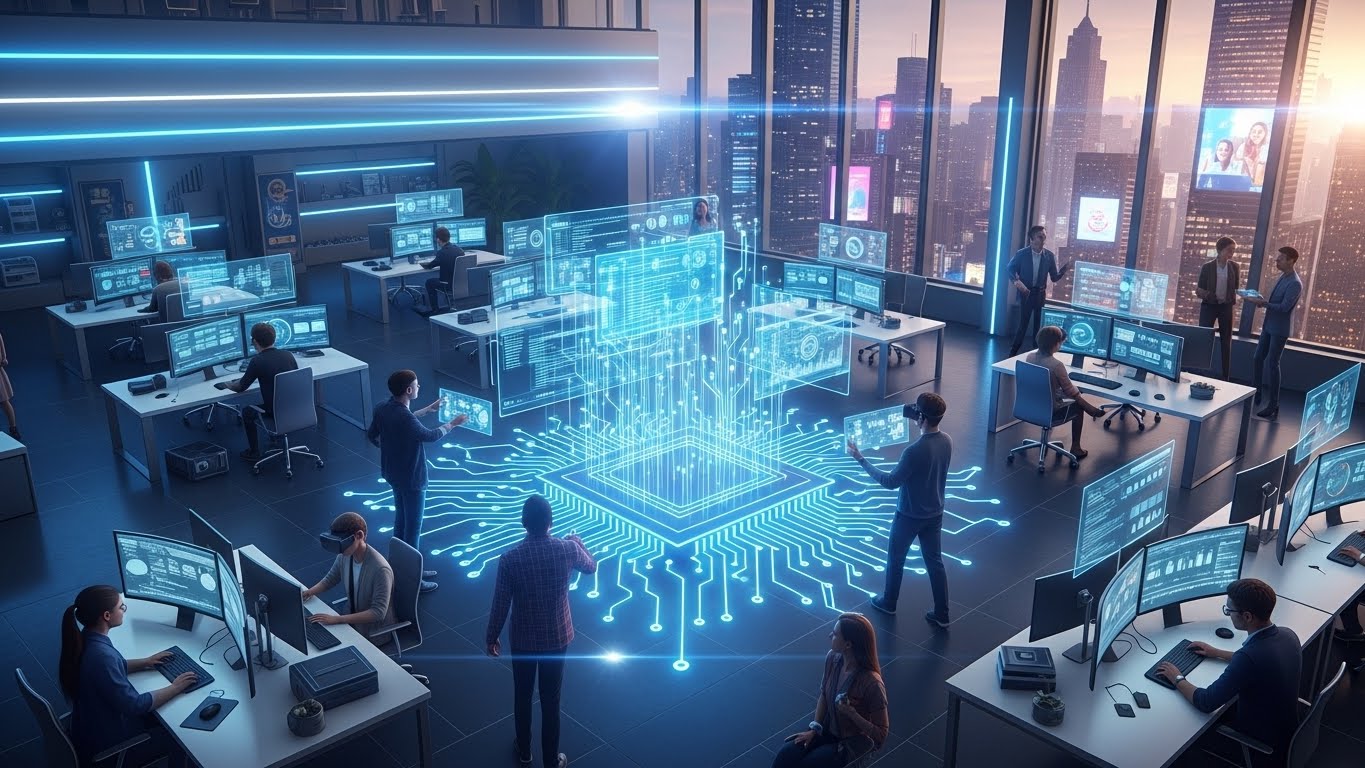

The Rise of Artificial Intelligence: Machines That Learn

Perhaps the most transformative force of the 21st century has been artificial intelligence. Once confined to science fiction, AI has rapidly become a ubiquitous presence. Machine learning algorithms now drive search engines, curate entertainment, help detect diseases, and even compose music.

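The early 2010s brought a wave of AI breakthroughs. Neural networks, loosely inspired by the human brain, had existed for decades. Then deep learning changed what they could do. It stacks many layers of these networks and trains them on vast datasets using powerful graphics processors. These systems began outperforming traditional software at recognizing speech, translating languages, and identifying patterns in images. Because they learn their rules from examples rather than having them written by hand, they reached levels of sophistication once thought impossible.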

AI now sits at the heart of nearly every industry. In healthcare, it assists doctors by flagging tumors that can be difficult for the human eye to detect. In transportation, it powers autonomous vehicles, some of which already operate on crowded city streets. In finance, it analyzes market data faster than any human analyst. And in the creative world, it collaborates with artists to generate poetry, visual art, and film.

Yet, as AI grows more capable, it raises ethical questions. What happens when machines can replicate human thought? Should algorithms decide who gets a loan, a job, or even parole? And as automation accelerates, what becomes of the human workforce?

The promise of AI is vast, but so is its peril. It mirrors humanity’s own dual nature — capable of creation and destruction, progress and harm. Whether AI becomes our greatest ally or our most dangerous invention depends entirely on how we wield it.

The Cloud Era: Storing the World’s Memory

Before the cloud, data was local. Files lived on hard drives, and loss meant oblivion. But the rise of cloud computing changed everything. Data was no longer bound by hardware; it became fluid, omnipresent, and accessible from anywhere.

The cloud is the silent engine of modern life. Every photo uploaded, every document shared, every streaming video — all are powered by vast networks of servers hidden in remote data centers. These invisible infrastructures hold the collective memory of humanity.

Businesses embraced the cloud to scale globally without physical constraints. Collaboration became seamless, enabling people across continents to work together in real time. During global crises like the COVID-19 pandemic, cloud services became the backbone of remote work and education, keeping the world connected even in isolation.

However, this convenience comes at a cost. Centralized data raises concerns about privacy, security, and control. When our memories, transactions, and communications live on servers owned by corporations, who truly owns them? The cloud represents both freedom and dependency — the freedom to access, and the dependency on those who provide access.

The Internet of Things: A Connected World of Objects

The internet was once a web of computers. Now, it is a web of everything. The Internet of Things (IoT) has transformed ordinary objects, such as refrigerators, thermostats, watches, and cars, into smart devices that collect data, communicate with one another, and in some cases learn from their users.

This quiet revolution has reshaped industries and homes alike. Smart homes adjust temperature and lighting automatically. Wearables track health in real time and can alert users to anomalies, such as an irregular heart rhythm, that may warrant medical attention. Cities use connected infrastructure to manage traffic, reduce energy consumption, and enhance safety.

Yet, the more connected the world becomes, the more vulnerable it grows. Every smart device is a potential entry point for cyberattacks, and every byte of data collected contributes to an expanding digital profile of individuals. The line between convenience and surveillance grows thinner each year.

Still, the IoT continues to expand, embedding intelligence into the fabric of daily life. It represents the next phase of digital evolution — not just connecting people, but connecting existence itself.

Biotechnology: Engineering Life Itself

While digital technology reshapes the virtual world, biotechnology reshapes the physical one. Advances in genetic engineering, synthetic biology, and medical technology are rewriting the code of life.

The mapping of the human genome, declared complete in 2003, unlocked the blueprint of our biology. Since then, breakthroughs like CRISPR have made it possible to edit genes with precision once unimaginable. Scientists are now beginning to treat some genetic disorders, engineer crops for resilience, and even explore the revival of extinct species.

In medicine, technology is converging with biology. Artificial organs, robotic surgery, and bioinformatics are shifting healthcare from reactive toward predictive. Personalized medicine uses genetic data to tailor treatments to individual patients, ushering in an age where prevention may replace cure.

However, the ethical dilemmas are immense. Where is the line between healing and enhancement? Should humans have the power to design their offspring or extend life indefinitely? As biotechnology advances, it forces humanity to confront the deepest philosophical question of all — not just how to live, but what life should be.

The Data Deluge: Information as Power

In the 21st century, data has become the new oil — a resource that fuels economies, drives innovation, and determines global power. Every click, purchase, movement, and conversation generates data. The digital world watches, records, and analyzes, turning human behavior into measurable patterns.

Big data analytics allows companies to predict consumer desires, governments to track pandemics, and scientists to model climate change. But this data-driven society also risks turning individuals into commodities. Personal information, once private, has become the currency of the modern world.

The question of data ethics grows more urgent each year. Who controls the flow of information? Who benefits from it? And who is left behind in a world where algorithms decide value?

Data has empowered humanity to understand itself in ways never before possible — but it has also exposed vulnerabilities in privacy, democracy, and autonomy. The balance between insight and intrusion defines the digital age’s moral frontier.

The Energy of Innovation: Powering the Digital Age

Every byte of data, every digital interaction, consumes energy. As the world grows more connected, the demand for electricity surges. The challenge of the 21st century is not just to innovate, but to sustain innovation.

Renewable energy technologies have become vital partners to digital growth. Solar, wind, and hydroelectric power are reshaping global energy landscapes, supported by advances in battery storage and smart grids. Data centers consume enormous amounts of electricity. Many are now striving for carbon neutrality through more efficient hardware and cooling and through a growing reliance on renewable power.

The race toward sustainability has also sparked new industries — from electric vehicles to clean hydrogen production. Technology is not only a consumer of energy but also a creator of solutions. The same ingenuity that built the digital world may be the key to preserving the physical one.

The Future of Work: Humans and Machines Together

Automation has always been part of technological progress, but artificial intelligence and robotics have accelerated it to new levels. Factories, offices, and even creative industries are seeing machines perform tasks once thought uniquely human.

This transformation has sparked both fear and opportunity. On one hand, automation threatens traditional jobs and deepens inequality. On the other, it liberates people from repetitive labor, opening paths to new forms of creativity and innovation.

The future of work will likely not be a battle between humans and machines, but a collaboration. AI can handle computation and pattern recognition, while humans provide emotion, intuition, and ethics. The real challenge lies in preparing societies for this hybrid reality — through education, adaptability, and new economic systems that value human contribution beyond labor.

Cybersecurity and the Invisible War

Every technological leap invites new forms of conflict. In the digital age, wars are no longer fought only on land, at sea, or in the air, but also in cyberspace.

Cybersecurity has become a critical concern for governments, corporations, and individuals alike. Hacking, data breaches, and ransomware attacks threaten financial systems, healthcare networks, and even national security. The digital battlefield is invisible but omnipresent, and its weapons are lines of code rather than bullets.

As our dependence on technology deepens, so too must our defenses. Encryption, AI-driven threat detection, and international cooperation will define the next phase of digital security. Yet the most important defense remains awareness — the understanding that in a connected world, every user plays a role in safeguarding the system.

The Metaverse: Beyond the Physical World

In recent years, the concept of the metaverse has captured global imagination — a virtual universe where people can live, work, and play. While still in its infancy, the metaverse represents the next frontier of digital experience.

Virtual reality, augmented reality, and blockchain technologies are converging to create spaces that blur the boundaries between physical and digital existence. In these virtual realms, identity becomes fluid, economy becomes decentralized, and imagination becomes architecture.

Whether the metaverse becomes a digital utopia or a hyper-commercialized trap remains to be seen. But one thing is certain: humanity’s desire to create and inhabit new worlds is as old as civilization itself. Technology simply gives that dream a new dimension.

Ethics and the Human Element: The Soul in the Machine

As technology grows more powerful, the question of ethics becomes unavoidable. Innovation without morality risks dehumanization. Whether it’s AI decision-making, genetic modification, or digital surveillance, the moral implications of technology define its true impact.

Humanity must remain at the center of progress. Compassion, empathy, and responsibility are not programmable traits — they are cultivated through reflection and culture. The challenge of the 21st century is not just to create smarter machines, but wiser societies.

Ethical technology requires transparency, fairness, and inclusion. It demands that innovation serves not just profit, but purpose. The future belongs not to those who invent the most, but to those who invent with conscience.

The Next Horizon: Quantum and Beyond

Even as today’s technologies reshape the world, tomorrow’s are already emerging. Quantum computing, nanotechnology, and space exploration promise to open realms of possibility beyond imagination.

Quantum computers could solve certain problems, such as simulating complex molecules, that would take classical machines millennia. Nanotechnology could revolutionize medicine, manufacturing, and energy at the atomic level. And the renewed race to explore Mars and beyond may one day make humanity a multi-planetary species.

Each of these frontiers holds both promise and peril. As always, technology is a mirror — reflecting both our genius and our flaws. The path ahead depends not on what we can build, but on what we choose to build.

Conclusion: Technology as the Human Story

The story of technology is the story of humanity itself — a constant striving to transcend limitations, to know more, do more, and be more. The 21st century has seen progress at a pace that no previous era could imagine, yet it has also shown that progress is not purely technical; it is deeply human.

Every invention reshapes not just the world, but the people who inhabit it. Technology is not an external force acting upon us — it is a reflection of our desires, fears, and dreams. The machines we create, the systems we design, and the networks we build all echo the same ancient impulse: the will to connect, to evolve, to endure.

As we move deeper into this technological renaissance, the greatest challenge will not be how far we can go, but how wisely we travel. Because in the end, the future of technology is not about machines — it is about us.